If you develop your own algorithms to test on OES, please consider building upon OpenHAIV, an open-source framework that we use to perform the tasks described below for open-world sensing.

Full-spectrum Out-of-Distribution (OOD) Detection

Semantic Shift OOD Detection & OSR

Recent work highlights a strong correlation between OOD detection and open-set recognition (OSR) in both problem settings and performance. Both tasks detect new categories with shifted semantics, but OSR additionally requires maintaining in-distribution (ID) classification accuracy. OES supports evaluating a model's ability to handle such semantic shifts. Unlike existing remote sensing benchmarks, which split ID and OOD samples at random, OES accounts for the degree of semantic shift between coarse and fine classes, aligning the setup with real-world deployment scenarios.

We unify the tasks of semantic shift OOD detection and open-set recognition (OSR) into a single test task to evaluate the model’s ability to handle semantic shifts.
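As a rough illustration of what one unified test run measures, the sketch below computes closed-set accuracy on ID test samples (the OSR side) and AUROC between ID and semantic-shift OOD samples (the OOD-detection side) from a model's logits over the ID classes. The maximum softmax probability (MSP) score is a common baseline swapped in here for illustration, not necessarily the scoring rule or metric set used by OES or OpenHAIV, and all function names are hypothetical.

```python
import math
from typing import Sequence


def softmax(logits: Sequence[float]) -> list:
    # Subtract the max logit for numerical stability
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def msp_score(logits: Sequence[float]) -> float:
    # Maximum softmax probability: higher means "more likely ID" (illustrative baseline)
    return max(softmax(logits))


def auroc(id_scores: Sequence[float], ood_scores: Sequence[float]) -> float:
    # Probability that a random ID sample outscores a random OOD sample (ties count 0.5)
    wins = 0.0
    for p in id_scores:
        for n in ood_scores:
            wins += 1.0 if p > n else 0.5 if p == n else 0.0
    return wins / (len(id_scores) * len(ood_scores))


def evaluate_semantic_shift(id_logits, id_labels, ood_logits) -> dict:
    # OSR side: closed-set accuracy over the ID classes (argmax of each logit row)
    preds = [max(range(len(row)), key=row.__getitem__) for row in id_logits]
    acc = sum(p == y for p, y in zip(preds, id_labels)) / len(id_labels)
    # OOD side: how well the MSP score separates ID test samples from OOD samples
    id_scores = [msp_score(row) for row in id_logits]
    ood_scores = [msp_score(row) for row in ood_logits]
    return {"id_accuracy": acc, "auroc": auroc(id_scores, ood_scores)}
```

Reporting both numbers from the same forward pass reflects the point above: a model can score well on OOD detection while losing ID accuracy, and the unified task exposes both.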

- ID Classes: the 94 ID classes are defined in `./sub-dataset1-RGB-domain1/OOD_split/ID_94.txt`.
- Training/Test Sets: organized in `./sub-dataset1-RGB-domain1/ID/train` and `./sub-dataset1-RGB-domain1/ID/test`.
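A minimal loading sketch, assuming that `ID_94.txt` lists one class name per line and that `ID/train` and `ID/test` hold one sub-folder per class (an ImageFolder-style layout). Neither format is specified in this section, and the helper names and accepted image extensions are hypothetical.

```python
from pathlib import Path

IMG_EXTS = {".png", ".jpg", ".jpeg", ".tif", ".tiff"}  # assumed image formats


def parse_id_classes(text: str) -> list:
    # Assumed format of ID_94.txt: one class name per line; blank lines ignored
    return [line.strip() for line in text.splitlines() if line.strip()]


def read_id_classes(split_file: Path) -> list:
    return parse_id_classes(split_file.read_text(encoding="utf-8"))


def list_samples(split_dir: Path, classes: list) -> list:
    # Assumed layout: <split_dir>/<class_name>/<image files>; label = index in `classes`
    samples = []
    for label, name in enumerate(classes):
        class_dir = split_dir / name
        if not class_dir.is_dir():
            continue  # skip classes that have no folder in this split
        for img in sorted(class_dir.iterdir()):
            if img.suffix.lower() in IMG_EXTS:
                samples.append((img, label))
    return samples


# Example usage (paths from the OES layout described above):
# root = Path("./sub-dataset1-RGB-domain1")
# id_classes = read_id_classes(root / "OOD_split" / "ID_94.txt")
# train = list_samples(root / "ID" / "train", id_classes)
# test = list_samples(root / "ID" / "test", id_classes)
```

Deriving labels from the order in `ID_94.txt` rather than from folder listing order keeps the label indices identical between the train and test splits.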


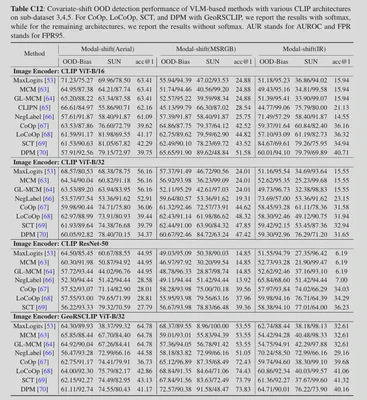

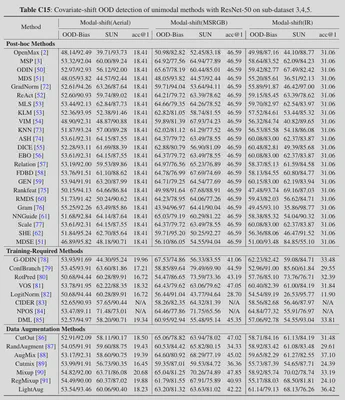

Covariate Shift OOD Detection & Generalization

Covariate-shift OOD detection, also referred to as full-spectrum OOD detection, emphasizes robustness to covariate shifts: the ID data remain semantically consistent while their covariates vary. Given the practical needs of remote sensing, we focus on the following shifts:

- Resampling bias, requiring model generalization across varying acquisition parameters (angle, height, resolution, time) within the same modality;

- Modality shift, demanding generalization across different modalities (e.g., satellite and aerial images) for the same semantic categories.

For each dataset exhibiting domain shift relative to Sub-Dataset 1, we define the following test tasks:

1. Resampling Bias Scenario

- ID Test Set: Resampled from Sub-Dataset 2 (`./sub-dataset2-RGB-domain2/ID/test`).
- ID Train Set: Unchanged from Sub-Dataset 1 (`./sub-dataset1-RGB-domain1/ID/train`).

OOD Datasets:

- OOD-Easy: 48 classes from Sub-Dataset 1 (minor semantic shifts).
- OOD-Hard: 47 classes from Sub-Dataset 1 (significant semantic shifts).
- Bias-OOD: 22 classes from Sub-Dataset 2 (semantic and covariate shifts). Path: `./sub-dataset2-RGB-domain2/OOD/test`.
- SUN: As above.
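In this full-spectrum setting, the covariate-shifted ID test set from Sub-Dataset 2 should still be accepted as ID, while each OOD set is scored separately so that easy, hard, and biased shifts can be compared. A minimal sketch, assuming the detector already assigns each image a scalar score where higher means more likely ID; the function names are hypothetical.

```python
from typing import Dict, Sequence


def auroc(id_scores: Sequence[float], ood_scores: Sequence[float]) -> float:
    # Fraction of (ID, OOD) pairs in which the ID sample scores higher; ties count 0.5
    wins = 0.0
    for p in id_scores:
        for n in ood_scores:
            wins += 1.0 if p > n else 0.5 if p == n else 0.0
    return wins / (len(id_scores) * len(ood_scores))


def evaluate_full_spectrum(shifted_id_scores: Sequence[float],
                           ood_sets: Dict[str, Sequence[float]]) -> Dict[str, float]:
    # Covariate-shifted ID samples (e.g. Sub-Dataset 2 ID/test) are the positives:
    # they keep their ID semantics and should NOT be flagged as OOD.
    # Each OOD set (e.g. OOD-Easy, OOD-Hard, Bias-OOD, SUN) gets its own AUROC.
    return {name: auroc(shifted_id_scores, scores) for name, scores in ood_sets.items()}
```

Reporting one AUROC per OOD set, rather than pooling them, keeps a strong result on OOD-Easy from masking a weak one on OOD-Hard.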


2. Aerial Modality-Shift Scenario

- ID Test Set: Aerial data from Sub-Dataset 3 (`./sub-dataset3-Aerial-domain3/ID/test`).
- Train Set: Unchanged (Sub-Dataset 1).

OOD Datasets:

- Bias-OOD: 66 classes from Sub-Dataset 3. Path: `./sub-dataset3-Aerial-domain3/OOD/test`.
- SUN: As above.

3. MSRGB Modality-Shift Scenario

- ID Test Set: MSRGB data from Sub-Dataset 4 (`./sub-dataset4-MSRGB-domain4/ID/test`).
- Train Set: Unchanged.

OOD Datasets:

- Bias-OOD: 22 classes from Sub-Dataset 4. Path: `./sub-dataset4-MSRGB-domain4/OOD/test`.
- SUN: As above.

4. IR Modality-Shift Scenario

- ID Test Set: IR data from Sub-Dataset 5 (`./sub-dataset5-IR-domain5/ID/test`).
- Train Set: Unchanged.

OOD Datasets:

- Bias-OOD: 26 classes from Sub-Dataset 5. Path: `./sub-dataset5-IR-domain5/OOD/test`.
- SUN: As above.
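Since all four scenarios share one training set and differ only in their ID test set and OOD sets, they can be collected into a single configuration. The sketch below encodes the paths and Bias-OOD class counts listed above; the `Scenario` class, its field names, and the scenario keys are hypothetical rather than part of OES or OpenHAIV, and SUN is referenced by name only because its location is not given in this section.

```python
from dataclasses import dataclass
from typing import Tuple

TRAIN_DIR = "./sub-dataset1-RGB-domain1/ID/train"  # shared by every scenario


@dataclass(frozen=True)
class Scenario:
    name: str
    id_test_dir: str          # covariate-shifted ID test set
    bias_ood_dir: str         # Bias-OOD test set from the same sub-dataset
    bias_ood_classes: int     # number of Bias-OOD classes
    other_ood: Tuple[str, ...] = ("SUN",)  # further OOD sets, referenced by name only
    train_dir: str = TRAIN_DIR


SCENARIOS = [
    Scenario("resampling-bias", "./sub-dataset2-RGB-domain2/ID/test",
             "./sub-dataset2-RGB-domain2/OOD/test", 22,
             other_ood=("OOD-Easy", "OOD-Hard", "SUN")),
    Scenario("aerial", "./sub-dataset3-Aerial-domain3/ID/test",
             "./sub-dataset3-Aerial-domain3/OOD/test", 66),
    Scenario("msrgb", "./sub-dataset4-MSRGB-domain4/ID/test",
             "./sub-dataset4-MSRGB-domain4/OOD/test", 22),
    Scenario("ir", "./sub-dataset5-IR-domain5/ID/test",
             "./sub-dataset5-IR-domain5/OOD/test", 26),
]


def ood_set_names(s: Scenario) -> list:
    # All OOD sets evaluated in a scenario: its Bias-OOD set plus the shared ones
    return ["Bias-OOD"] + list(s.other_ood)
```

Keeping `train_dir` fixed across entries makes the "train once, test on every shifted domain" protocol explicit.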


Incremental Learning

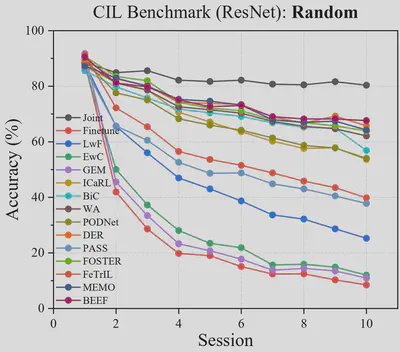

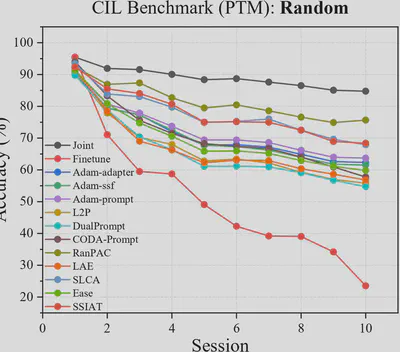

Class-Incremental Learning (CIL)

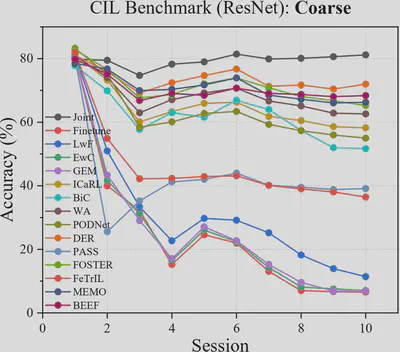

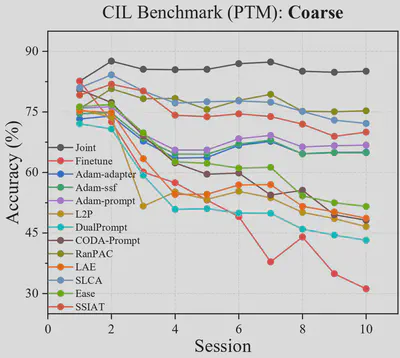

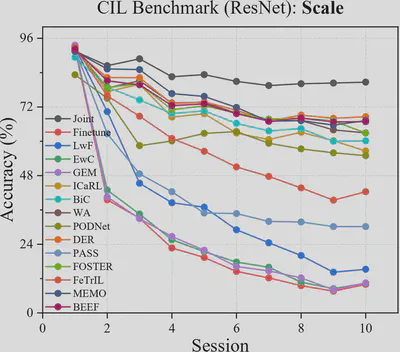

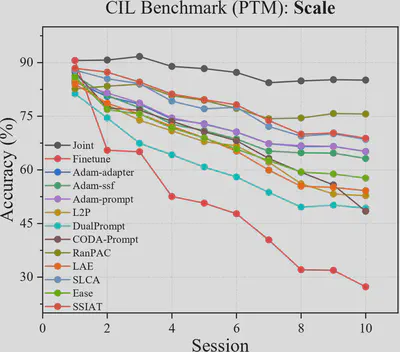

The rapid advancement of remote sensing generates vast amounts of high-quality images every day, requiring models that can recognize novel classes in open-world scenarios. However, existing CIL benchmarks in remote sensing are constrained by limited category diversity, narrow coarse-grained coverage, and uniform data scales, and therefore fail to capture real-world complexity. To address these limitations, we evaluate existing CIL methods on three benchmarks:

Random, which follows the widely used CIL setting and randomly assigns classes to 10 sessions of equal size.
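A minimal sketch of the Random protocol, assuming a flat list of class names; the seed and function name are hypothetical. If the class count is not a multiple of 10, the sketch gives the first few sessions one extra class each, which is one reasonable reading of "equal size" rather than a documented OES rule.

```python
import random
from typing import List, Sequence


def random_sessions(classes: Sequence[str], num_sessions: int = 10, seed: int = 0) -> List[list]:
    # Shuffle a copy of the class list with a fixed seed for reproducibility
    order = list(classes)
    random.Random(seed).shuffle(order)
    # Deal classes into near-equal sessions; the first `extra` sessions get one more class
    base, extra = divmod(len(order), num_sessions)
    sessions, start = [], 0
    for i in range(num_sessions):
        size = base + (1 if i < extra else 0)
        sessions.append(order[start:start + size])
        start += size
    return sessions
```

Using a dedicated `random.Random(seed)` instance instead of the global generator keeps the split reproducible regardless of other random calls in the training code.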

Coarse, in which each session contains the fine classes of one coarse category, simulating a model that continually learns from data captured by different types of dedicated satellites. All classes are divided into 10 coarse categories, one per session. The split is defined in `./sub-dataset1-RGB-domain1/CIL_split/CIL_coarse_split.json`.
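A sketch of turning the coarse split into sessions, assuming (hypothetically) that `CIL_coarse_split.json` maps each coarse category name to a list of its fine class names; the actual schema of the file may differ. The overlap check reflects the requirement that each fine class belongs to exactly one coarse category, and hence to exactly one session.

```python
import json
from typing import Dict, List


def coarse_sessions(split: Dict[str, List[str]]) -> List[List[str]]:
    # One session per coarse category, in the order the mapping lists them;
    # each session contains all fine classes of that coarse category.
    sessions, seen = [], set()
    for fines in split.values():
        overlap = seen.intersection(fines)
        if overlap:
            raise ValueError(f"fine classes in more than one coarse category: {sorted(overlap)}")
        seen.update(fines)
        sessions.append(list(fines))
    return sessions


def load_coarse_split(path: str) -> List[List[str]]:
    # Assumed JSON shape: {"<coarse category>": ["<fine class>", ...], ...}
    with open(path, encoding="utf-8") as f:
        return coarse_sessions(json.load(f))
```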

Scale, which replicates a continual progression from large to small scales: the categories are distributed evenly across the 10 sessions, ordered from large to small scale. The split is defined in `./sub-dataset1-RGB-domain1/CIL_split/CIL_scale_split.json`.
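This section does not state how a class's scale is measured, so the sketch below takes a hypothetical per-class scale value (for example, a typical object footprint) as input, sorts classes from large to small, and cuts the ordered list into 10 near-equal consecutive sessions. The authoritative assignment is the one in `CIL_scale_split.json`; the function name is hypothetical.

```python
from typing import Dict, List


def scale_sessions(class_scale: Dict[str, float], num_sessions: int = 10) -> List[List[str]]:
    # Order classes from largest to smallest scale (ties broken by name for determinism)
    order = sorted(class_scale, key=lambda c: (-class_scale[c], c))
    # Split the ordered list into `num_sessions` consecutive, near-equal chunks
    base, extra = divmod(len(order), num_sessions)
    sessions, start = [], 0
    for i in range(num_sessions):
        size = base + (1 if i < extra else 0)
        sessions.append(order[start:start + size])
        start += size
    return sessions
```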

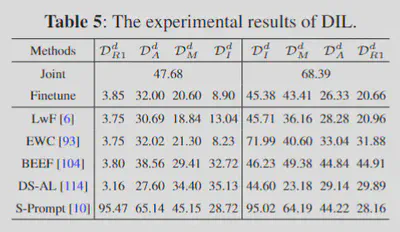

Domain-Incremental Learning (DIL)

To assess a model's adaptability to data from different domains, we benchmark DIL on OES. We select 50 categories with the same semantic classes across RGB satellite (Sub-Dataset 1), RGB aerial (Sub-Dataset 3), MSRGB (Sub-Dataset 4), and IR (Sub-Dataset 5) images.
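DIL keeps the label space fixed (the same 50 classes) and changes only the domain from session to session. The sketch below assumes the domains are visited in the order listed above, which this section does not specify, and summarizes a run with two metrics commonly used in incremental learning: average accuracy over seen domains after the final session, and the mean of that average over all sessions. These metric choices and names are assumptions, not necessarily those reported by OES.

```python
from typing import List

# Domains sharing the same 50 classes; this session order is an assumption
DOMAINS = ["RGB satellite (Sub-Dataset 1)", "RGB aerial (Sub-Dataset 3)",
           "MSRGB (Sub-Dataset 4)", "IR (Sub-Dataset 5)"]


def dil_metrics(acc: List[List[float]]) -> dict:
    # acc[t][d]: accuracy on domain d's test set after training session t.
    # Only domains already seen (d <= t) are averaged.
    per_session = [sum(acc[t][: t + 1]) / (t + 1) for t in range(len(acc))]
    return {
        "final_avg_acc": per_session[-1],                            # after the last domain
        "avg_incremental_acc": sum(per_session) / len(per_session),  # mean over sessions
    }
```

A drop in accuracy on earlier domains as later rows are filled in is the forgetting that DIL is designed to expose.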

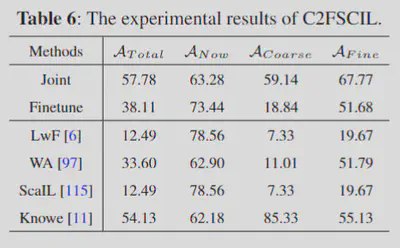

Coarse-to-Fine Few-shot Class-Incremental Learning (C2FSCIL)

In C2FSCIL, the base session provides the model with all training samples, labeled only with their coarse labels; these samples span 10 coarse-grained classes and 189 fine-grained classes. Each subsequent incremental session introduces samples with fine labels belonging to the 10 coarse classes, supplying only 5 samples per class, consistent with the few-shot setting. Thus, the initial training phase learns all 10 coarse classes, while each incremental phase introduces 20 fine-grained classes.
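A sketch of how C2FSCIL sessions could be assembled, assuming a mapping from fine to coarse class and a list of training samples per fine class. How fine classes are grouped into sessions is not specified here, so the sketch simply takes them in the order given, 20 at a time, and draws 5 samples per class; if the number of fine classes is not a multiple of 20, the last group is smaller. All names are hypothetical.

```python
import random
from typing import Dict, List, Tuple


def c2fscil_sessions(fine_to_coarse: Dict[str, str],
                     samples_by_fine: Dict[str, List[str]],
                     classes_per_session: int = 20,
                     shots: int = 5,
                     seed: int = 0) -> Tuple[list, List[list]]:
    rng = random.Random(seed)
    # Base session: every training sample, but labeled only with its coarse class
    base = [(s, fine_to_coarse[f]) for f, items in samples_by_fine.items() for s in items]
    # Incremental sessions: consecutive groups of fine classes, `shots` samples each,
    # now labeled with the fine class (rng.sample raises ValueError if a class has < `shots`)
    fine_classes = list(samples_by_fine)
    incremental = []
    for i in range(0, len(fine_classes), classes_per_session):
        group = fine_classes[i:i + classes_per_session]
        incremental.append([(s, f) for f in group for s in rng.sample(samples_by_fine[f], shots)])
    return base, incremental
```

The same images can appear in both the base session (coarse label) and an incremental session (fine label); only the label granularity changes, which is what makes the task coarse-to-fine.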
